You can create HTTP requests fairly easily and parse the results however you need to. I particularly like using the Apache HttpClient library. The project site also has a simple getting-started example, but here is an SSCCE from Mkyong: `import java.io.IOException`

A link extractor tool scans the HTML of a web page and extracts all of its links. It is a 100% free SEO tool with multiple uses in SEO work. Some of the most important tasks it is used for are:

- Finding and counting the external and internal links on your web page.
- Extracting all links from a website.
- Manipulating the HTML elements, attributes, and text.
- Cleaning user-submitted content against a safe whitelist to prevent XSS attacks.
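The fetch-and-extract workflow described above can be sketched in Java. The text recommends Apache HttpClient; to stay free of third-party dependencies, this sketch swaps in the JDK's own `java.net.http.HttpClient` (Java 11+). It finds links with a simple regular expression rather than a real HTML parser, so unusual markup may be missed. The class `LinkExtractor` and its methods are hypothetical names.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class LinkExtractor {
    // Matches href="..." or href='...' inside <a ...> tags; a rough heuristic, not a full HTML parser.
    private static final Pattern HREF = Pattern.compile(
            "<a\\b[^>]*?\\bhref\\s*=\\s*[\"']([^\"']+)[\"']", Pattern.CASE_INSENSITIVE);

    // Downloads a page body with the JDK's built-in HTTP client (Java 11+).
    static String fetch(String url) throws IOException, InterruptedException {
        HttpClient client = HttpClient.newHttpClient();
        HttpRequest request = HttpRequest.newBuilder(URI.create(url)).GET().build();
        HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
        return response.body();
    }

    // Returns every href found in the HTML, resolved against the page's own URL.
    static List<URI> extractLinks(String html, URI base) {
        List<URI> links = new ArrayList<>();
        Matcher m = HREF.matcher(html);
        while (m.find()) {
            try {
                links.add(base.resolve(m.group(1).trim()));
            } catch (IllegalArgumentException e) {
                // Skip href values that are not valid URIs.
            }
        }
        return links;
    }

    // A link counts as internal when it points at the same host as the page it came from.
    static boolean isInternal(URI link, URI base) {
        return link.getHost() != null && link.getHost().equalsIgnoreCase(base.getHost());
    }
}
```

For example, `LinkExtractor.extractLinks(LinkExtractor.fetch(url), URI.create(url))` lists a page's links, and counting how many pass `isInternal` gives the internal/external split. For real-world pages, a dedicated HTML parser is the more robust choice.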

Article URL extractor full

Article URL extractor code

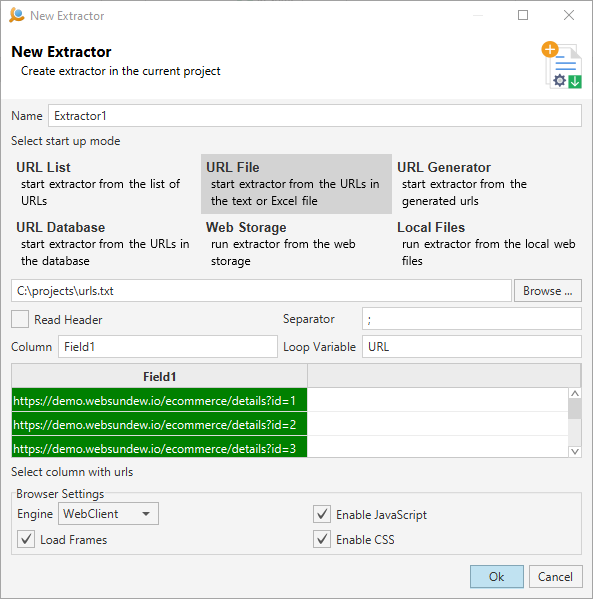

URL Extractor features:

- Uses the latest Cocoa multi-threading technology, with no legacy code inside.
- Extracts Web addresses, FTP addresses, email addresses, feeds, Telnet addresses, local file URLs, and news URLs.
- Extracts directly from the Web, cross-navigating Web pages in the background.
- Extracts via search engines, starting from keywords and navigating all the linked pages from one page to the next without limit, all from a single keyword.
- Extracts from specific international Google sites, so URL extraction can focus on an individual country and language.

About URL Extractor: this tool extracts all URLs from your text. It works with all standard links, including links with non-English characters if the link includes a trailing / followed by text. If you want to remove duplicate URLs, please use our Remove Duplicate Lines tool.

This paper presents a DOM-based method for extracting website information that preserves only the theme information, improving search efficiency.
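Extracting URLs from plain text and optionally dropping duplicates can be sketched with `java.util.regex`. The scheme list (http, https, ftp, telnet, feed, file), the e-mail pattern, and the rule that strips trailing sentence punctuation are assumptions; `news:` and other addresses without `//` are not matched. `TextUrlExtractor` is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class TextUrlExtractor {
    // Either a scheme-prefixed address running up to the next whitespace, or a bare e-mail address.
    // UNICODE_CHARACTER_CLASS makes \w and \b Unicode-aware; \S already accepts non-ASCII letters.
    private static final Pattern URL_OR_EMAIL = Pattern.compile(
            "\\b(?:(?:https?|ftp|telnet|feed|file)://\\S+|[\\w.+-]+@[\\w-]+(?:\\.[\\w-]+)+)",
            Pattern.CASE_INSENSITIVE | Pattern.UNICODE_CHARACTER_CLASS);

    // Characters that usually end a sentence rather than belong to the URL.
    private static final String TRAILING = ".,;:!?)\"'";

    static List<String> extract(String text, boolean removeDuplicates) {
        List<String> found = new ArrayList<>();
        Matcher m = URL_OR_EMAIL.matcher(text);
        while (m.find()) {
            String s = m.group();
            // Trim trailing punctuation such as the full stop in "see https://x.org/page."
            while (!s.isEmpty() && TRAILING.indexOf(s.charAt(s.length() - 1)) >= 0) {
                s = s.substring(0, s.length() - 1);
            }
            found.add(s);
        }
        if (!removeDuplicates) {
            return found;
        }
        // LinkedHashSet drops repeats while keeping the order of first appearance.
        Set<String> unique = new LinkedHashSet<>(found);
        return new ArrayList<>(unique);
    }
}
```

Using a `LinkedHashSet` rather than a `HashSet` means the de-duplicated list keeps the order in which URLs first appear in the text.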

The Article Extraction API automatically extracts clean news articles and text articles from any web page.

URL Extractor extracts from multiple files inside a folder, at any level of nesting (even thousands of files). In Web extraction mode it can navigate for hours without user interaction, extracting all the URLs it finds in all the Web pages it surfs unattended, or it can start from a search engine using keywords, looking in all the resulting and linked pages in an unlimited navigation and URL extraction.
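Extracting from every file inside a folder, at any nesting depth, can be sketched in Java with `Files.walk`. This is neither URL Extractor's own implementation nor the Article Extraction API; the class name `FolderUrlExtractor`, the http/https-only pattern, and the assumption that files are UTF-8 text are illustrative choices.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.regex.Matcher;
import java.util.regex.Pattern;
import java.util.stream.Collectors;
import java.util.stream.Stream;

class FolderUrlExtractor {
    // http/https URLs, stopping at whitespace, quotes, or angle brackets.
    private static final Pattern URL = Pattern.compile("https?://[^\\s\"'<>]+");

    // Walks root to any nesting depth and maps each file (relative to root) to the URLs it contains.
    static Map<Path, List<String>> extractFromFolder(Path root) throws IOException {
        List<Path> files;
        try (Stream<Path> paths = Files.walk(root)) {
            files = paths.filter(Files::isRegularFile).sorted().collect(Collectors.toList());
        }
        Map<Path, List<String>> result = new TreeMap<>();
        for (Path file : files) {
            String text;
            try {
                text = Files.readString(file, StandardCharsets.UTF_8);
            } catch (IOException e) {
                continue; // unreadable or not valid UTF-8 (e.g. a binary file): skip it
            }
            List<String> urls = new ArrayList<>();
            Matcher m = URL.matcher(text);
            while (m.find()) {
                urls.add(m.group());
            }
            if (!urls.isEmpty()) {
                result.put(root.relativize(file), urls);
            }
        }
        return result;
    }
}
```

Collecting the file list first and closing the `Files.walk` stream before reading keeps directory handles from staying open during the extraction.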

Article URL extractor series

URL Extractor is a Cocoa application that extracts email addresses and URLs from files, from the Web, and via search engines. It can start from a single Web page and navigate all the links inside, looking for emails or URLs to extract, and save them all on the user's hard drive. It can also extract from a single file or from the entire content of a folder on your hard drive, at any nesting level. It lets the user specify a list of Web pages to use as navigation starting points and then moves on to other Web pages through cross-navigation. You can also specify a series of keywords: it then searches for Web pages related to those keywords via search engines and starts cross-navigating the pages, collecting URLs. The extracted data can be saved to disk as text files, ready to be used for the user's purposes. Once done, it can save URL Extractor documents to disk containing all the settings used for a particular folder, file, or set of Web pages, ready to be reused.
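URL Extractor's internals are not described here, but the cross-navigation idea (start from a list of pages, follow their links, collect addresses) can be sketched in Java as a breadth-first crawl. The page fetcher is passed in as a function so the sketch can be tried against an in-memory site; `CrossNavigator`, `collectEmails`, and the `maxPages` limit are hypothetical, and the e-mail pattern is a simplification.

```java
import java.net.URI;
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashSet;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Optional;
import java.util.Set;
import java.util.function.Function;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class CrossNavigator {
    // Link targets in href attributes, ignoring any #fragment part.
    private static final Pattern HREF =
            Pattern.compile("href\\s*=\\s*[\"']([^\"'#]+)", Pattern.CASE_INSENSITIVE);
    // A simple e-mail pattern; it does not cover every address the RFCs allow.
    private static final Pattern EMAIL =
            Pattern.compile("[A-Za-z0-9._%+-]+@[A-Za-z0-9-]+(?:\\.[A-Za-z0-9-]+)+");

    // Breadth-first cross-navigation: begin at the seed pages, follow every link found,
    // and stop once maxPages URLs have been tried so the crawl always terminates.
    // fetcher returns a page's HTML, or Optional.empty() if the page cannot be loaded.
    static Set<String> collectEmails(List<String> seeds,
                                     Function<String, Optional<String>> fetcher,
                                     int maxPages) {
        Deque<String> queue = new ArrayDeque<>(seeds);
        Set<String> visited = new HashSet<>();
        Set<String> emails = new LinkedHashSet<>(); // first-seen order, no duplicates
        while (!queue.isEmpty() && visited.size() < maxPages) {
            String url = queue.poll();
            if (!visited.add(url)) {
                continue; // this URL was already tried
            }
            Optional<String> page = fetcher.apply(url);
            if (page.isEmpty()) {
                continue;
            }
            String html = page.get();
            Matcher e = EMAIL.matcher(html);
            while (e.find()) {
                emails.add(e.group());
            }
            Matcher h = HREF.matcher(html);
            while (h.find()) {
                String link = h.group(1).trim();
                if (link.regionMatches(true, 0, "mailto:", 0, 7)) {
                    continue; // the address itself is already caught by EMAIL
                }
                try {
                    String next = URI.create(url).resolve(link).toString();
                    if (!visited.contains(next)) {
                        queue.add(next);
                    }
                } catch (IllegalArgumentException ex) {
                    // skip malformed links
                }
            }
        }
        return emails;
    }
}
```

For live pages, `fetcher` could wrap the JDK's `java.net.http.HttpClient`, and the collected set can be saved as a text file with `Files.write(Path.of("emails.txt"), emails)` (the file name is only an example).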
